After switching from macOS to Manjaro on my MacBook Pro, I needed a truly encrypted backup solution. After considering a host of backup tools, including Restic, I opted for a less mainstream tool that supports BLAKE2b-authenticated encryption, leaves you in control of your own encryption key and, as an added bonus, produces compact, deduplicated and compressed backups that are well suited to cloud storage:

BorgBackup.

In this post I’ll cover how to migrate encrypted Borg backups from any system that can run MinIO to Scaleway, a cloud services provider offering 500GB of object storage for less than 6€ per month. The service was brought to my attention by a friend and fellow After Dark user named Teo.

Read on to learn how to create Borg backups with MinIO and Scaleway.

Getting Started

Do the following before you continue:

- Create an account on Scaleway

- Provision an Object Storage bucket (500GB)

- Create a bucket called borgbackup

- Create an API token (public/secret key)

- Install Borg on the backup machine

- Initialize a Borg repo with blake2 encryption (repokey-blake2)

- Export and record the repository key (BORG_KEY), and save the passphrase somewhere safe too

- Create the first archive using borg create
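Here is a minimal sketch of the Borg steps in the list above, assuming Borg 1.x. The borg_setup function, the run/DRY_RUN helper and the example paths are hypothetical names for illustration; only the borg subcommands and the repokey-blake2 mode come from Borg itself.

```shell
#!/usr/bin/env bash
# Sketch of the Borg 1.x setup steps (hypothetical helper names and paths).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: borg_setup REPO KEY_EXPORT_FILE SOURCE_DIR...
borg_setup() {
  local repo="$1" key_file="$2"
  shift 2
  # AES-256-CTR encryption with a BLAKE2b-256 MAC; the key is stored,
  # protected by your passphrase, inside the repo itself.
  run borg init --encryption=repokey-blake2 "$repo"
  # Keep a copy of the key outside the repo in case the repo copy is damaged.
  run borg key export "$repo" "$key_file"
  # First archive; {hostname} and {now} are Borg placeholders, not shell syntax.
  run borg create --stats --progress "$repo::{hostname}-{now}" "$@"
}
```

Call it at the bottom of the script, for example borg_setup ~/backup ~/borg-key.txt ~/Documents, and run the script with DRY_RUN=1 first to preview the commands. borg init and borg create will prompt for the passphrase unless BORG_PASSPHRASE is set.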

If you choose not to use Scaleway (and given they require a credit card, I can’t say I blame you), these steps can be adapted for other storage providers with just a little imagination.

Syncing Backups with rclone

rclone dubs itself “rsync for cloud storage”. The idea is “it just works”. But that wasn’t my experience. Here’s why…

Install rclone on Manjaro or Arch Linux:

sudo pacman -S rclone

You should see output like:


resolving dependencies...

looking for conflicting packages...

Packages (1) rclone-1.48.0-1

Total Installed Size: 44,40 MiB

:: Proceed with installation? [Y/n]

(1/1) checking keys in keyring [#####################] 100%

(1/1) checking package integrity [#####################] 100%

(1/1) loading package files [#####################] 100%

(1/1) checking for file conflicts [#####################] 100%

(1/1) checking available disk space [#####################] 100%

:: Processing package changes...

(1/1) installing rclone [#####################] 100%

:: Running post-transaction hooks...

(1/1) Arming ConditionNeedsUpdate...

Configure rclone for Scaleway using rclone config. You should end up with a config similar to the following:

Remote config

--------------------

[scaleway]

type = s3

provider = Scaleway

env_auth = false

access_key_id = SCWXXXXXXXXXXXXXX

secret_access_key = 1111111-2222-3333-44444-55555555555555

region = fr-par

endpoint = s3.fr-par.scw.cloud

Copy the Borg repository (the path you passed to borg init) to the Scaleway bucket using rclone copy:

rclone copy /home/"$(whoami)"/backup scaleway:borgbackup

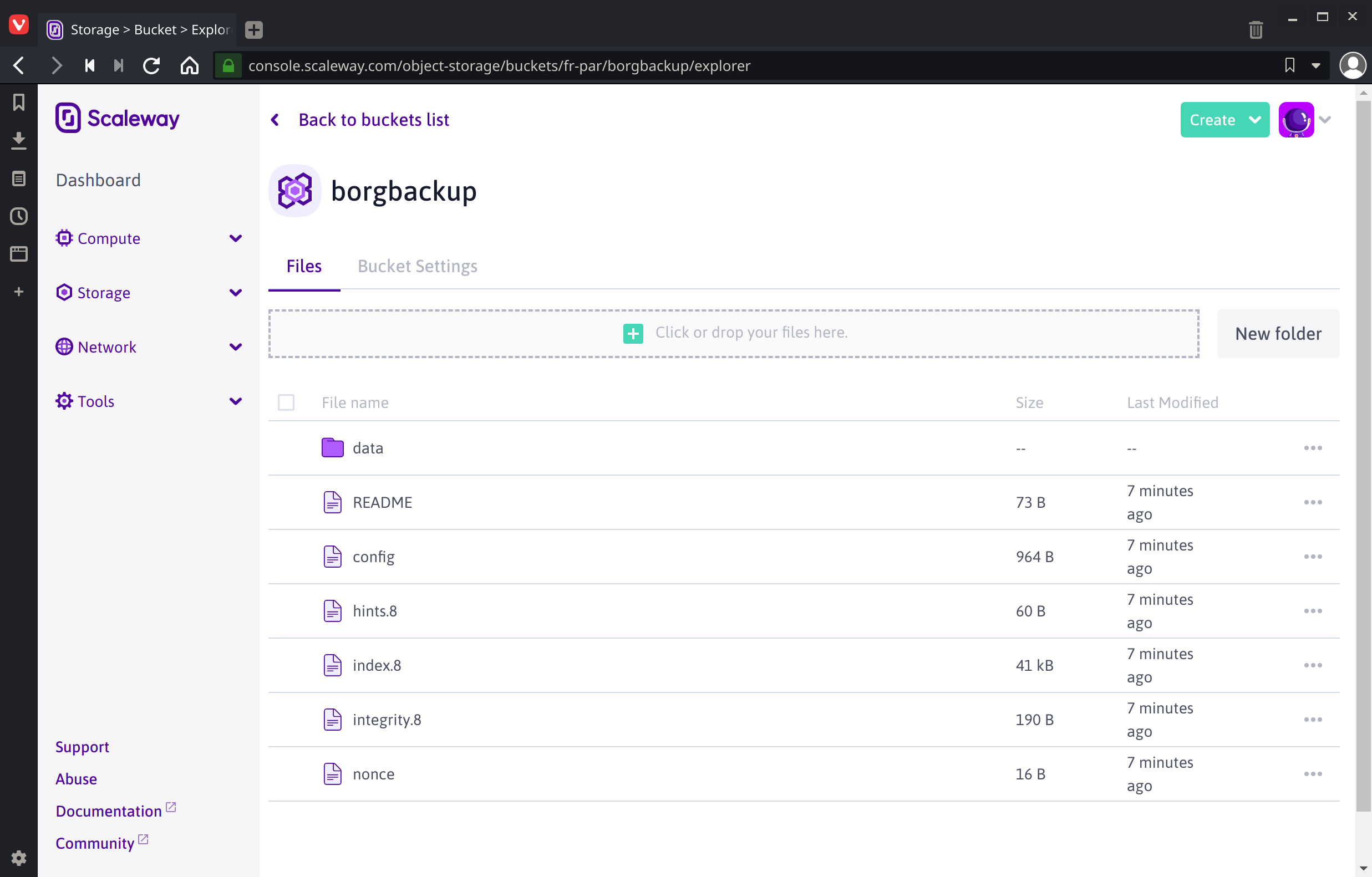

The contents of the borg backup repo should appear in the Scaleway bucket:

The first time I tried this, the rclone process hung … indefinitely, even though the data seemed to have been transferred to Scaleway. Not very encouraging. As it turns out, I’d forgotten to pass the --progress flag, had no idea how long the transfer would take and grew impatient.

After 10 minutes I interrupted the process with Ctrl+C, then ran rclone sync in an attempt to identify possible data inconsistencies.

The sync process failed abruptly and spit out an unintelligible error:

2019/08/07 18:33:21 Unsolicited response received on idle HTTP channel starting with "HTTP/1.0 408 Request Time-out\r\nCache-Control: no-cache\r\nConnection: close\r\nContent-Type: application/xml\r\n\r\n<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n<Error>\n <Code>RequestTimeout</Code>\n <Message>Client request timeout</Message>\n</Error>\n"; err=<nil>

I’m sure rclone’s a great tool, but that’s not exactly the first-time user experience I’m looking for, so I decided to try mounting the Scaleway bucket on the local filesystem instead.
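If you’d rather give rclone another go, a more patient run might look like this minimal sketch, assuming the scaleway remote configured above and the repo at ~/backup. The rclone_push function and the run/DRY_RUN helper are hypothetical; --progress and rclone check --one-way are regular rclone options. Unlike rclone sync, rclone check only compares files and never modifies the destination.

```shell
#!/usr/bin/env bash
# Sketch: copy with visible progress, then verify (hypothetical helper names).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: rclone_push [LOCAL_REPO] [REMOTE:BUCKET]
rclone_push() {
  local src="${1:-$HOME/backup}" remote="${2:-scaleway:borgbackup}"
  # --progress prints live transfer stats, so a long upload doesn't look like a hang.
  run rclone copy --progress "$src" "$remote"
  # Compare sizes (and hashes where both sides support them);
  # --one-way only checks that every local file exists on the remote.
  run rclone check --one-way "$src" "$remote"
}
```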

Syncing Backups with s3fs

Run sudo pacman -S s3fs to install s3fs-fuse. You should see output like:


resolving dependencies...

looking for conflicting packages...

Packages (1) s3fs-fuse-1.85-1

Total Download Size: 0,21 MiB

Total Installed Size: 0,57 MiB

:: Proceed with installation? [Y/n]

:: Retrieving packages...

s3fs-fuse-1.85-1-x86_64 210,0 KiB 123K/s 00:02 [#####################] 100%

(1/1) checking keys in keyring [#####################] 100%

(1/1) checking package integrity [#####################] 100%

(1/1) loading package files [#####################] 100%

(1/1) checking for file conflicts [#####################] 100%

(1/1) checking available disk space [#####################] 100%

:: Processing package changes...

(1/1) installing s3fs-fuse [#####################] 100%

:: Running post-transaction hooks...

(1/1) Arming ConditionNeedsUpdate...

Once it’s installed, follow Scaleway’s instructions on Using Object Storage with s3fs to mount your S3-compatible bucket on your local machine’s file system.

Should you find Scaleway’s setup instructions on the skimpy side, as I did, the expansive s3fs --help output should get you squared away.
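The passwd_file option in the mount command below points at a credentials file. Here’s a minimal sketch for creating it, assuming the single-line ACCESS_KEY_ID:SECRET_ACCESS_KEY format that s3fs documents and the API token created earlier; write_s3fs_passwd is a hypothetical helper name and the keys are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: write the s3fs credentials file (hypothetical helper name).
set -euo pipefail

# Usage: write_s3fs_passwd ACCESS_KEY SECRET_KEY [FILE]
write_s3fs_passwd() {
  local access="$1" secret="$2" file="${3:-$HOME/.passwd-s3fs}"
  # Create the file with owner-only permissions from the start;
  # s3fs rejects a credentials file that grants "others" permissions.
  (umask 077 && printf '%s:%s\n' "$access" "$secret" > "$file")
  # Tighten it in case the file already existed with looser permissions.
  chmod 600 "$file"
}
```

For example, write_s3fs_passwd SCWXXXXXXXXXXXXXX "$SECRET" writes ~/.passwd-s3fs, the path used in the mount command.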

Here’s the mount command I cobbled together after combining docs:

sudo s3fs borgbackup: /run/media/"$(whoami)"/scaleway-s3fs \
-o passwd_file=${HOME}/.passwd-s3fs,url=https://s3.fr-par.scw.cloud

Voilà! The contents copied to the bucket earlier with rclone appear in the s3fs mount automatically, since the mount is just a view of the same bucket. To verify, list the directory contents:

sudo ls /run/media/"$(whoami)"/scaleway-s3fs

Given the earlier rclone copy, the contents of the FUSE volume should look like:

config data hints.8 index.8 integrity.8 nonce README
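If you want to script that check rather than eyeball it, here’s a small sketch, assuming the Borg 1.x repository layout shown in that listing and at least one completed archive. looks_like_borg_repo is a hypothetical helper that only checks the expected files exist; borg check is the tool for verifying the data itself.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check that a directory looks like a Borg 1.x repo.
set -euo pipefail

# Usage: looks_like_borg_repo DIR  (exit status 0 if the core files are present)
looks_like_borg_repo() {
  local dir="$1" name
  for name in config README; do
    [ -f "$dir/$name" ] || { echo "missing file: $name" >&2; return 1; }
  done
  [ -d "$dir/data" ] || { echo "missing directory: data" >&2; return 1; }
  # index.N and hints.N carry the latest transaction number as a suffix.
  compgen -G "$dir/index.*" > /dev/null || { echo "missing index.N" >&2; return 1; }
}
```

Against the mount, that would be sudo bash -c '...' or a root shell, since the volume above was mounted with sudo.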

Depending on your desktop environment (DE), you may run into issues with your FUSE volume after browsing it in a graphical file manager. Subsequent ls commands may then fail with:

ls: reading directory '/run/media/user/scaleway-s3fs': Input/output error

The error persists until the entire FUSE volume is remounted:

sudo umount /run/media/"$(whoami)"/scaleway-s3fs

sudo s3fs borgbackup: /run/media/"$(whoami)"/scaleway-s3fs \
-o passwd_file=${HOME}/.passwd-s3fs,url=https://s3.fr-par.scw.cloud \
-o nonempty
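To avoid retyping both commands, a check-then-remount helper might look like the following sketch. It assumes the bucket, mount point and credentials file from above and a volume mounted as root, which is why ls also runs through sudo; remount_if_broken and the SUDO/DRY_RUN switches are hypothetical. umount is the standard way to unmount a FUSE volume as root (fusermount -u does the same for a user-owned mount).

```shell
#!/usr/bin/env bash
# Sketch: remount the s3fs volume only if listing it fails (hypothetical helper).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: remount_if_broken [MOUNT_POINT] [BUCKET]; set SUDO= to skip sudo.
remount_if_broken() {
  local mnt="${1:-/run/media/$(whoami)/scaleway-s3fs}" bucket="${2:-borgbackup}"
  local sudo="${SUDO-sudo}"
  local opts="passwd_file=$HOME/.passwd-s3fs,url=https://s3.fr-par.scw.cloud"
  # A healthy mount lists fine; a stale one fails with "Input/output error".
  if $sudo ls "$mnt" > /dev/null 2>&1; then
    echo "mount OK: $mnt"
    return 0
  fi
  # Unmount the stale volume (it may already be gone), then mount the bucket again.
  run $sudo umount "$mnt" || true
  run $sudo s3fs "$bucket" "$mnt" -o "$opts" -o nonempty
}
```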

And while remounting fixed the issue with ls, using a FUSE volume in this context felt even more kludgy than dealing with nondescript rclone errors. There has to be a better way, and thankfully there is: MinIO.

Migrating Data with MinIO

Until now we’ve been trying to convert files for use with object storage while simultaneously transferring them to an S3-compatible cloud storage provider. Given the issues we saw earlier, my hunch is that we should instead convert the files to object storage locally, and only then migrate the object data to the remote. And that’s where MinIO comes in.

Install MinIO packages

Install the following two packages using your favorite package manager:

- minio: object storage server compatible with Amazon S3

- minio-client: replacement for ls, cp, mkdir, diff and rsync commands for filesystems and object storage

Then run minio server and point it at the borg repository:

minio server /home/"$(whoami)"/backup

You should see output like:


Endpoint: http://10.99.71.226:9000 http://192.168.43.121:9000 http://127.0.0.1:9000

AccessKey: REDACTED

SecretKey: REDACTED

Browser Access:

http://10.99.71.226:9000 http://192.168.43.121:9000 http://127.0.0.1:9000

Command-line Access: https://docs.min.io/docs/minio-client-quickstart-guide

$ mc config host add myminio http://10.99.71.226:9000 REDACTED REDACTED

Object API (Amazon S3 compatible):

Go: https://docs.min.io/docs/golang-client-quickstart-guide

Java: https://docs.min.io/docs/java-client-quickstart-guide

Python: https://docs.min.io/docs/python-client-quickstart-guide

JavaScript: https://docs.min.io/docs/javascript-client-quickstart-guide

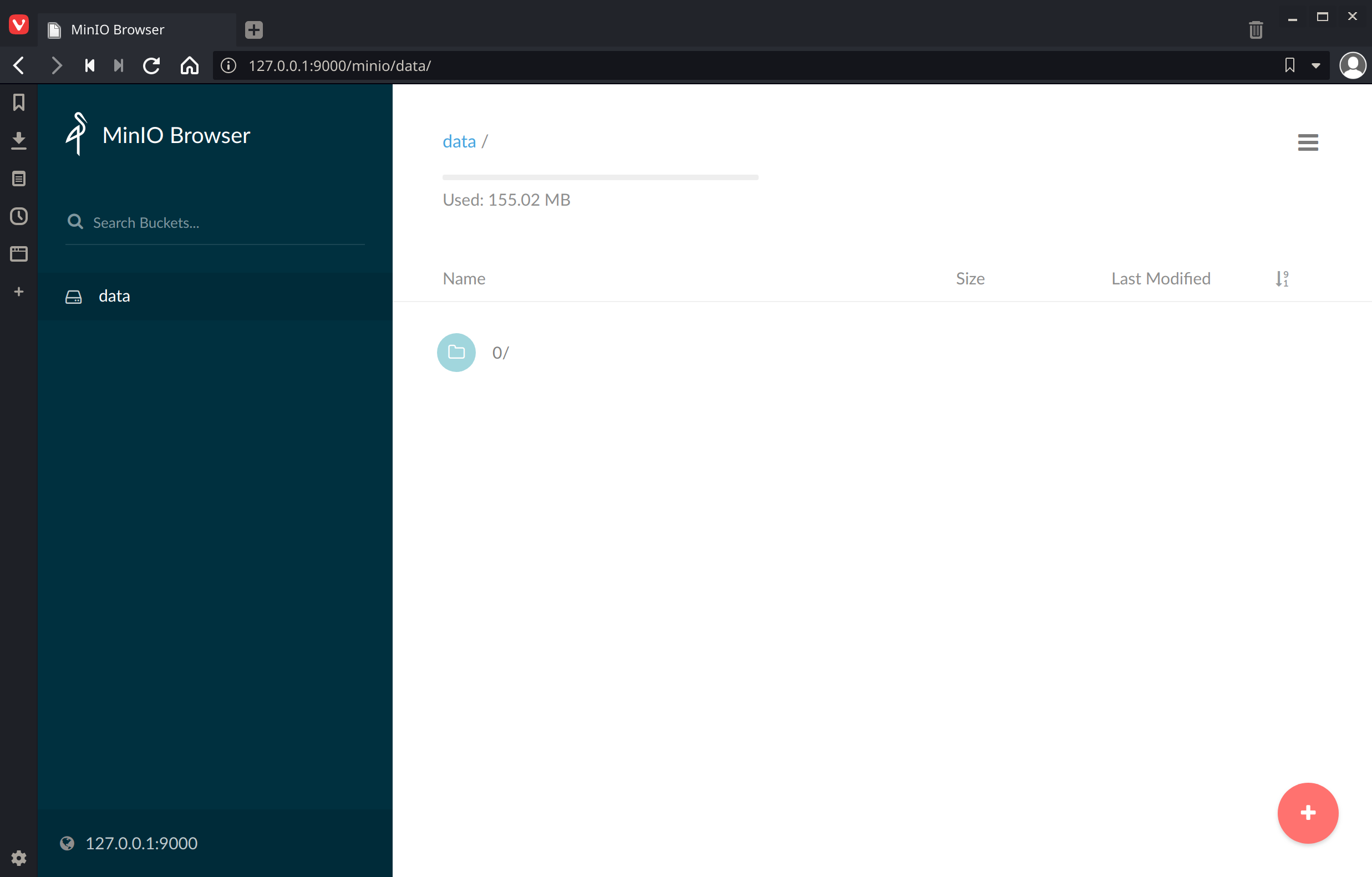

.NET: https://docs.min.io/docs/dotnet-client-quickstart-guideNavigate to http://127.0.0.1:9000 and confirm the contents are as expected:

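If you’re looking at a bucket called data, you’re not seeing the entire Borg repository: it’s missing the files we saw earlier using rclone and s3fs. That’s because MinIO serves each top-level directory of the path it was given as a bucket, so the loose files at the top of the repo (config, README, index.N and so on) don’t show up.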

To expose the entire Borg repository, nest the contents of the backup folder in a subdirectory aptly named borg, for example:

cd ~ && \
mkdir borg && \
mv backup/* "$_" && \
mv borg backup
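A slightly more defensive sketch of that move, assuming the same ~/backup layout: nest_repo (a hypothetical helper) refuses to nest twice and, because * does not match dotfiles, leaves MinIO’s own .minio.sys metadata directory at the top level of the served path.

```shell
#!/usr/bin/env bash
# Sketch: move a Borg repo one level down so MinIO serves it as one bucket.
set -euo pipefail

# Usage: nest_repo BACKUP_DIR [BUCKET_NAME]
nest_repo() {
  local backup_dir="$1" bucket="${2:-borg}" entry
  # Already nested (e.g. a second run): leave everything where it is.
  if [ -d "$backup_dir/$bucket" ]; then
    echo "already nested: $backup_dir/$bucket" >&2
    return 0
  fi
  mkdir "$backup_dir/$bucket"
  # Move every visible top-level entry except the new bucket directory itself.
  for entry in "$backup_dir"/*; do
    [ "$entry" = "$backup_dir/$bucket" ] && continue
    mv "$entry" "$backup_dir/$bucket/"
  done
}
```

Calling nest_repo ~/backup has the same effect as the one-liner above; running it again is a no-op.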

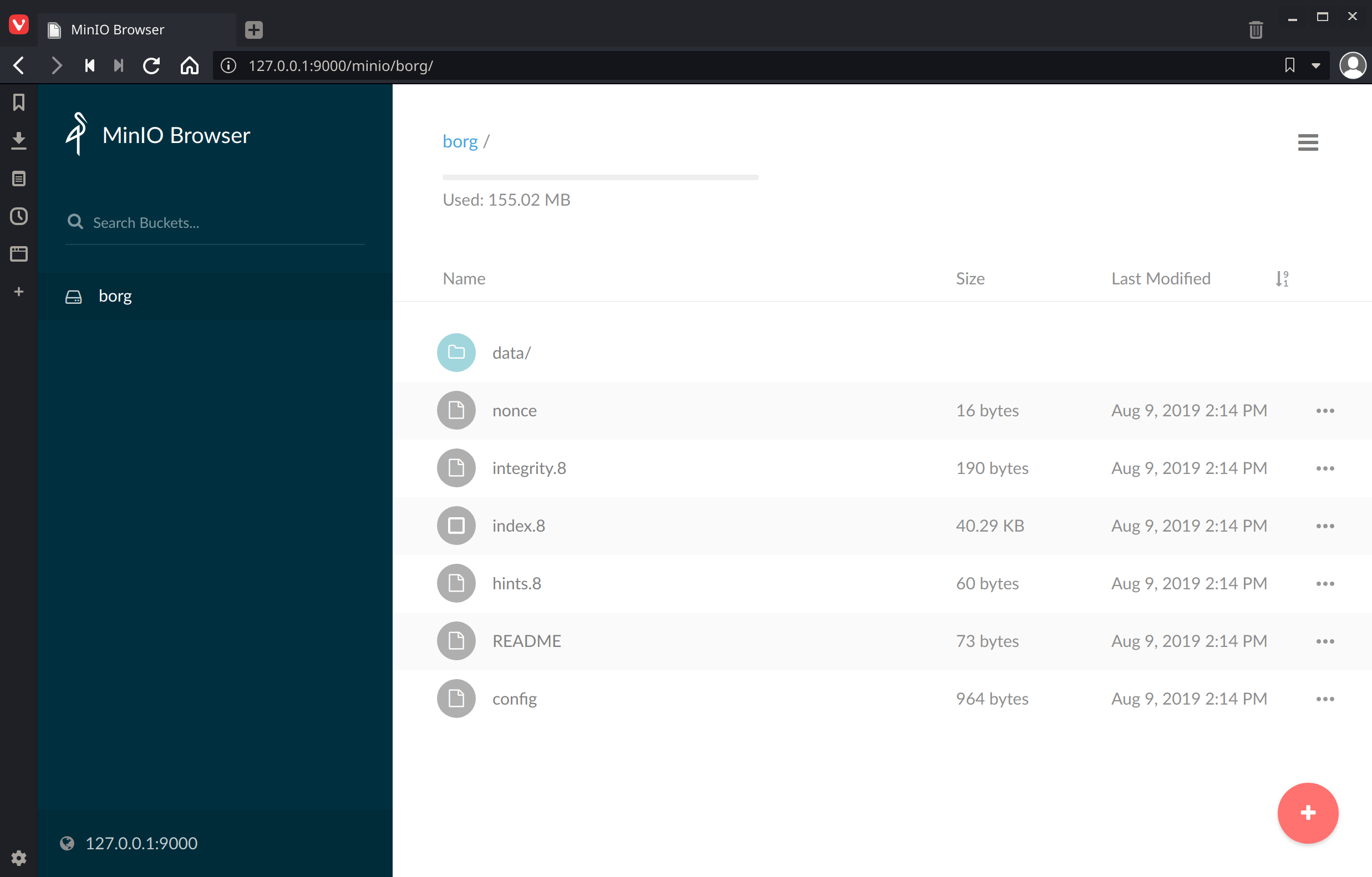

At which point refreshing MinIO Browser should show something like:

If that’s what you see, you’re ready to migrate the backup data to Scaleway.

Migrate backup data

With minio server still running and its borg bucket intact, go ahead and run through the config steps detailed in Migrating Object Storage data with Minio Client on the Scaleway website.

Next, add your running MinIO host to the config as well, using the Command-line Access info printed to the console when you started MinIO:

mc config host add myminio http://10.99.71.226:9000 ACCESS_KEY SECRET_KEY
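For reference, here’s a sketch of both host entries side by side, assuming the alias names scaleway and myminio used in the migration command and placeholder keys. mc_add_hosts is a hypothetical function; mc config host add is the command from MinIO’s startup output, and newer mc releases replace it with mc alias set, which takes the same positional arguments. Pointing myminio at 127.0.0.1 assumes mc runs on the same machine as the server.

```shell
#!/usr/bin/env bash
# Sketch: register both S3 endpoints with mc (hypothetical helper name).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: mc_add_hosts SCW_ACCESS_KEY SCW_SECRET_KEY MINIO_ACCESS_KEY MINIO_SECRET_KEY
mc_add_hosts() {
  # Scaleway's fr-par endpoint, the same one used in the rclone and s3fs configs.
  run mc config host add scaleway https://s3.fr-par.scw.cloud "$1" "$2" --api S3v4
  # The local MinIO server started earlier.
  run mc config host add myminio http://127.0.0.1:9000 "$3" "$4"
  # Each alias should now list its buckets: borgbackup remotely, borg locally.
  run mc ls scaleway
  run mc ls myminio
}
```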

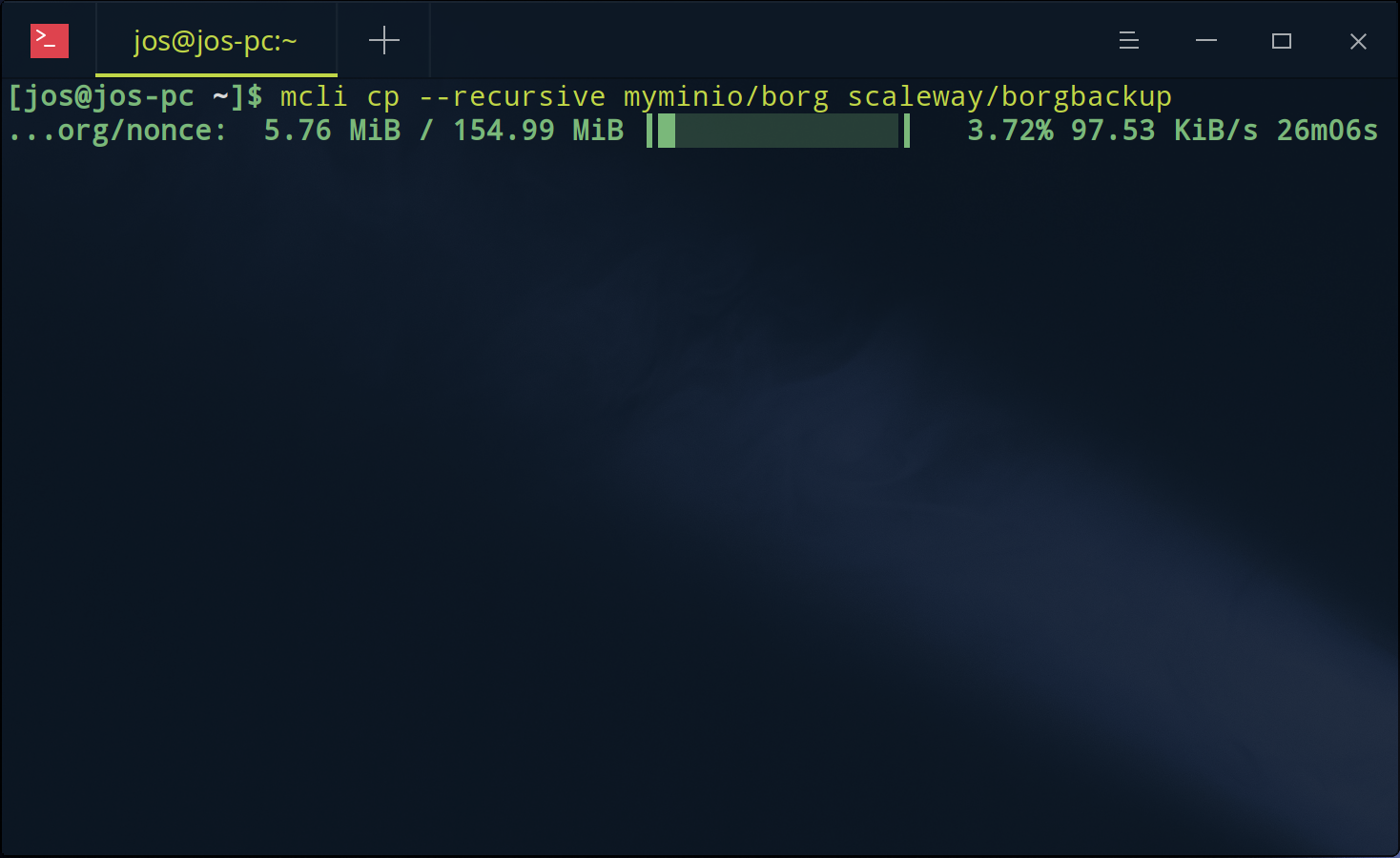

Finally, perform the migration like so:

mc cp --recursive myminio/borg scaleway/borgbackup

And hang tight while the data is migrated to Scaleway with progress indication:

Because Borg deduplicates and compresses its data (with or without encryption), your transfer should finish faster than it would with uncompressed backups, and the result takes up less space in your cloud storage bucket.
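For later backups you shouldn’t need to copy everything again. A minimal sketch using mc mirror, which uploads only new or changed objects, followed by mc diff as a quick check. It assumes the myminio/borg and scaleway/borgbackup paths from above and that the repo files sit at the top level of the borgbackup bucket; sync_to_scaleway is a hypothetical name. Note that --remove propagates local deletions, so a damaged local repo would be mirrored faithfully too.

```shell
#!/usr/bin/env bash
# Sketch: incremental re-upload of the Borg repo with mc (hypothetical helper).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: sync_to_scaleway [SOURCE] [TARGET]
sync_to_scaleway() {
  local src="${1:-myminio/borg}" dst="${2:-scaleway/borgbackup}"
  # --overwrite updates objects that changed locally (e.g. nonce, config);
  # --remove deletes remote objects removed locally (old index.N files,
  # segments Borg compacted away).
  run mc mirror --overwrite --remove "$src" "$dst"
  # Lists objects missing on either side or differing in size;
  # no output means the listings match.
  run mc diff "$src" "$dst"
}
```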

Other Storage Providers

If Scaleway isn’t for you, there are other options you may wish to consider for object storage depending on your own unique needs.

Managed S3 object storage providers you may wish to consider:

- Wasabi, by far the most cost-effective for backups.

- DigitalOcean Spaces.

- Amazon S3, which offers a 12-month free tier for new users.

- Object Storage on Vultr is also possible.

Self-hosted S3-compatible object storage possibilities:

- Self-hosted MinIO server on your own ODROID microcomputer.

- OpenStack Swift, if you’re ready to drink that Kool-Aid.

Tips

If you need to migrate the data in the opposite direction, simply swap the order of the buckets in the mc cp command.
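A sketch of that reverse trip followed by a consistency check with Borg, assuming a running MinIO server exporting ~/backup, the aliases from above, and that mc places the objects directly in the borg bucket (check with mc ls and adjust the paths if it nests them one level deeper). restore_and_check is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: pull the repo back from Scaleway, then let Borg verify it.
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Usage: restore_and_check [LOCAL_REPO]
restore_and_check() {
  local repo="${1:-$HOME/backup/borg}"
  # Same command as the migration, with source and target swapped.
  run mc cp --recursive scaleway/borgbackup myminio/borg
  # Check repository and archive consistency, then list the archives.
  run borg check "$repo"
  run borg list "$repo"
}
```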

In a sense, Borg does not recommend syncing a repository to a cloud storage service at all: its docs suggest creating multiple distinct repositories (each with a separate repo ID and separate keys), so that something bad happening in repo1 will not affect repo2.
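Following that advice, a minimal sketch keeps two independent repositories and writes each backup to both, rather than copying one repo to make the other. The REPOS paths and helper names are hypothetical; each borg init generates its own repository ID and key, so the repos share nothing but the source data.

```shell
#!/usr/bin/env bash
# Sketch: back up to two independent Borg repos (hypothetical paths and names).
set -euo pipefail

# Print each command instead of running it when DRY_RUN=1.
run() { if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi; }

# Hypothetical locations: e.g. one stays local, the other is pushed to Scaleway.
REPOS=("$HOME/backup" "$HOME/backup-offsite")

# One-time setup: initialize each repo separately (new ID and key per repo).
init_repos() {
  local repo
  for repo in "${REPOS[@]}"; do
    run borg init --encryption=repokey-blake2 "$repo"
  done
}

# Every run: create the same archive independently in each repo.
# Usage: backup_to_repos SOURCE_DIR...
backup_to_repos() {
  local repo
  for repo in "${REPOS[@]}"; do
    run borg create --stats "$repo::{hostname}-{now}" "$@"
  done
}
```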

Looking for something to make life easier? Automate your backups with borgmatic, a CLI wrapper for BorgBackup, or use Vorta if you’d like a GUI.

Summary

In this tutorial I’ve shown you how to use MinIO and MinIO Client to back up Borg backup data to an S3-compatible remote object store on Scaleway. I’ve also covered two other techniques for copying data to Scaleway, along with drawbacks that could lead to data loss if not handled with diligence and care.

While I’m using the techniques above for system backups, they could be used for more. In fact, with 500GB of object storage you could host a website on S3, externalize Git LFS file blobs, or even host a massive collection of random media for public or private use.

Use your imagination and go borg wild.